AI Made Really, Really Simple

Reading the popular press, and even some of the technical journals, you would think that our future robot overlords are preparing for the takeover: Siri and Alexa are about to form Club Skynet, and the middle class is on the verge of being hollowed out. There is very little that humans can do that a deeply learned, well-trained neural net cannot.

Driving some of this speculation is the use of scary and thrilling terms like ‘deep learning’ or ‘neural net training’. These anthropomorphic terms create an aura of mystery and a sense that we are about to cross into a world where machines gain consciousness. We are nowhere near that. I don’t want to come across as a naysayer. What is going on is tremendous; I have an A.I. project myself. Ideas that have been around for 50 years finally have enough computing power behind them to be useful. It’s as if we finally have calculus, and now we can figure out how to fly rockets.

My goal is to take some of the mystery (and hype) out of the A.I. discussion, using as little math as possible. I think this is important as people evaluate investment and career choices.

The Mugato

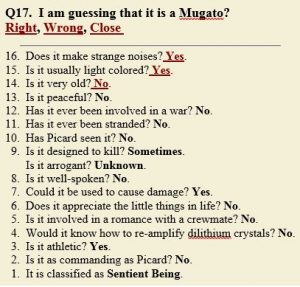

Any moderately skilled Star Trek trivia buff will recognize this creature as the Mugato from the original series (S2E19). I came across a website (20q.net) that claims to use neural net technology to play 20 Questions. It sounded impressive and potentially a great demonstration of neural net prowess. There was a Star Trek category, a particular skill of mine.

I was game and chose the Mugato to test its chops. Here are the questions it asked and my answers:

Doggonit! It got it right! In fact, it gets most things right, or it gets close and then figures it out. If it can play 20 Questions, what can’t it do?

It’s demonstrations like this that have everyone’s head spinning. But what’s really going on here? How could it possibly get these trivia questions right by asking such bad questions?

Let’s Start With a Simple Example

Imagine you are a climatologist stranded on an alien planet. The only instrument you were able to salvage from the wreckage was a thermometer. Being a climatologist, you are curious about the climate you find yourself in. Every day, a few times a day, you take a temperature reading and mark it on a graph. At first there is not enough information to make any kind of prediction about the weather.

It’s not clear which data points should be ignored as outliers, or whether the graph should be a straight line or some other shape.

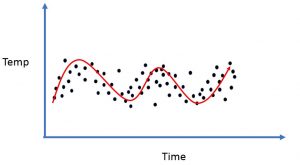

As a diligent scientist, you collect data over a long period of time. Now your picture looks like this:

A pattern has emerged, and you took enough college math to create a function that best fits the data. Now you have a tool that helps you make weather predictions: given the date, you can predict the temperature, and given a temperature reading, you can predict the date, or at least the season.
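
To make the graph-paper exercise concrete, here is a minimal Python sketch of fitting a seasonal curve by least squares. The article does not say which function shape to use, so this assumes a sine wave with a known 360-day year; the readings are synthetic, generated from an invented mean of 10° and a swing of ±25° plus noise, and the names `fit_seasonal_curve` and `predict` are hypothetical.

```python
import math
import random

# Assumption for illustration: the planet's year is 360 days long.
YEAR_DAYS = 360.0

def features(day):
    # Basis functions for a yearly cycle: constant, sine, cosine.
    angle = 2 * math.pi * day / YEAR_DAYS
    return [1.0, math.sin(angle), math.cos(angle)]

def solve3(a, b):
    # Gaussian elimination with partial pivoting for a 3x3 system a.x = b.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_seasonal_curve(days, temps):
    # Least squares: solve the normal equations (X^T X) w = X^T y.
    xtx = [[0.0] * 3 for _ in range(3)]
    xty = [0.0] * 3
    for d, t in zip(days, temps):
        f = features(d)
        for i in range(3):
            xty[i] += f[i] * t
            for j in range(3):
                xtx[i][j] += f[i] * f[j]
    return solve3(xtx, xty)

def predict(weights, day):
    # Evaluate the fitted curve on a given day.
    return sum(w * f for w, f in zip(weights, features(day)))

# Synthetic readings (invented): mean 10, swing of 25, noise with std dev 3.
rng = random.Random(42)
days = [rng.uniform(0, 3 * YEAR_DAYS) for _ in range(500)]
temps = [10 + 25 * math.sin(2 * math.pi * d / YEAR_DAYS) + rng.gauss(0, 3) for d in days]

weights = fit_seasonal_curve(days, temps)
print("fitted weights:", [round(w, 2) for w in weights])
print("predicted temperature on day 90:", round(predict(weights, 90), 1))
```

Fitting here means solving for the three weights that minimize the squared gap between the curve and the readings. Once they are found, `predict` turns a date into a temperature estimate.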

Did your graph paper ‘learn’ about the weather on this planet? Was it ‘trained’ on a data set? The answer is ‘sort of’. You would not characterize your weather tool as intelligent (artificial or otherwise). However, this is exactly how most A.I. tools work.

A.I. is inspired by nature. A neural net uses a set of interconnected simulated neurons, loosely modeled on a brain. It takes input, extracts patterns from that input, and produces output. This is where the inspiration from nature stops and math takes over.
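
The ‘simulated neuron’ is less mysterious than it sounds. In a common textbook formulation, it multiplies each input by a weight, adds a bias, and squashes the total with an activation function. The sketch below assumes the logistic (sigmoid) activation, one of several in use, and hand-picks hypothetical weights so the neuron behaves like a soft AND gate.

```python
import math

def neuron(inputs, weights, bias):
    # Weighted sum of the inputs plus a bias...
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ...squashed into the range (0, 1) by the logistic (sigmoid) function.
    return 1.0 / (1.0 + math.exp(-total))

# Hypothetical weights: the neuron only "fires" when both inputs are 1.
weights = [10.0, 10.0]
bias = -15.0

for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(neuron([a, b], weights, bias), 3))
```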

It turns out that a neural net can approximate any continuous function, with any number of inputs and any number of outputs, to any desired accuracy (a result known as the universal approximation theorem). You just need enough data, neurons and computing power. For those interested in the math, here is a link to an excellent article. For the moment, accept this as truth.
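
The theorem is an existence result, not a recipe, but its intuition fits in a few lines. This hypothetical sketch hand-wires one hidden layer of steep sigmoid neurons into a smoothed staircase that tracks `math.sin` on [0, 2π]. No training or data is involved: each neuron is a soft step, and its output weight is the rise in the target function across that step. The function names and the `sharpness` parameter are my own.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def build_one_layer_net(f, a, b, n_hidden, sharpness=10.0):
    """Hand-wire a one-hidden-layer sigmoid network approximating f on [a, b].

    Hidden unit i is a soft step placed between grid points x[i-1] and x[i];
    its output weight is the jump f(x[i]) - f(x[i-1]). Summing the steps
    gives a smoothed staircase that tracks f.
    """
    spacing = (b - a) / n_hidden
    grid = [a + spacing * i for i in range(n_hidden + 1)]
    output_bias = f(grid[0])
    units = []
    for i in range(1, n_hidden + 1):
        threshold = (grid[i - 1] + grid[i]) / 2
        jump = f(grid[i]) - f(grid[i - 1])
        units.append((threshold, jump))

    def net(x):
        return output_bias + sum(
            jump * sigmoid(sharpness * (x - threshold) / spacing)
            for threshold, jump in units
        )

    return net

def max_error(f, net, a, b, samples=1000):
    # Largest gap between f and the network on an evenly spaced test grid.
    xs = [a + (b - a) * k / samples for k in range(samples + 1)]
    return max(abs(f(x) - net(x)) for x in xs)

for n in (5, 20, 100):
    net = build_one_layer_net(math.sin, 0.0, 2 * math.pi, n)
    err = max_error(math.sin, net, 0.0, 2 * math.pi)
    print(n, "hidden neurons -> max error", round(err, 4))
```

Adding neurons shrinks the steps, so the maximum error falls as `n` grows. Real networks find their weights by training rather than by hand, but the result is the same kind of thing: a function fitted to a target.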

For example, our A.I. alien-planet weather predictor takes one input, either time or temperature, and produces a prediction. The Star Trek 20 Questions application takes a series of yes-or-no answers as inputs and makes a prediction from a number of possible answers.

A More Complex Example

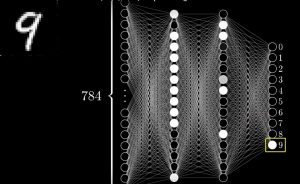

How does that work beyond a simple 2D graph? Let’s look at the classic A.I. 101 project: handwritten digit recognition. Here, each handwritten digit is represented on a 28×28 pixel grid, which gives 784 inputs. There are 10 outputs, one for each digit. For any set of 784 inputs, the recognizer function generates a probability that the input matches each of the digits. The higher the probability for a digit, the more likely it is that the input represents that digit. This is a statistical result, no different in kind from our alien weather tool.
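
Stripped to its simplest form, the digit recognizer is a function from 784 numbers to 10 probabilities. The sketch below assumes a single layer of weights followed by a softmax, which turns raw scores into probabilities that sum to 1; practical recognizers usually add hidden layers. The weights are random placeholders, not a trained model, so every digit ends up with a similarly low probability.

```python
import math
import random

N_PIXELS = 28 * 28   # 784 inputs
N_DIGITS = 10        # one output per digit, 0 through 9

def softmax(scores):
    # Turn raw scores into positive probabilities that sum to 1.
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def recognize(pixels, weights, biases):
    # One score per digit: a weighted sum over all 784 pixel intensities.
    scores = [
        sum(w * p for w, p in zip(weights[d], pixels)) + biases[d]
        for d in range(N_DIGITS)
    ]
    return softmax(scores)

# Untrained, randomly initialised parameters (placeholders, not a real model).
rng = random.Random(0)
weights = [[rng.gauss(0, 0.01) for _ in range(N_PIXELS)] for _ in range(N_DIGITS)]
biases = [0.0] * N_DIGITS

image = [rng.random() for _ in range(N_PIXELS)]  # a random "scribble"
probs = recognize(image, weights, biases)
best = max(range(N_DIGITS), key=lambda d: probs[d])
print(f"most likely digit: {best} ({probs[best]:.1%})")
```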

A random set of inputs will generate a low probability for all the digits, while a set of inputs that closely matches one of the digits will score higher for that digit. Building this model takes thousands of handwritten examples. Most A.I. systems express their output as a probability (an 89% chance this picture is a cat). Our weather tool is the same: it can only say, for example, that it is more likely winter than summer. Our weatherman also needed a lot of data to be properly ‘trained’.

For a large number of inputs, such as an image, the mathematics are complex, with hefty doses of multidimensional calculus and linear algebra. At its core, however, the system is simply finding the most probable fit for the input data it has been fed. Nothing more.
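
The ‘training’ step can be sketched with the weatherman. This minimal example assumes only two seasons and invented, labeled readings (winter around -5°, summer around 25°), and uses logistic regression fitted by gradient descent, one of the simplest ways to learn a probability from data. The names `train` and `p_winter` are hypothetical.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(temps, is_winter, lr=0.1, epochs=1000):
    # Fit P(winter | temp) = sigmoid(w * x + b) by gradient descent on log-loss.
    # Temperatures are standardised first so one learning rate works well.
    mean = sum(temps) / len(temps)
    std = (sum((t - mean) ** 2 for t in temps) / len(temps)) ** 0.5
    xs = [(t - mean) / std for t in temps]
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, is_winter):
            err = sigmoid(w * x + b) - y  # predicted probability minus label
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return lambda t: sigmoid(w * (t - mean) / std + b)

# Invented, labeled readings: 1 = winter, 0 = summer.
rng = random.Random(1)
winter = [rng.gauss(-5, 6) for _ in range(200)]
summer = [rng.gauss(25, 6) for _ in range(200)]
temps = winter + summer
labels = [1] * len(winter) + [0] * len(summer)

p_winter = train(temps, labels)
for t in (-10, 10, 30):
    print(f"{t:>4} degrees: P(winter) = {p_winter(t):.2f}")
```

Training only nudges two numbers until the predicted probabilities match the labeled data as closely as possible. The output is a probability, the same kind of answer as ‘89% chance this picture is a cat’.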

Going back to the Mugato, it has taken thousands upon thousands of players to build up a statistical model that can generate results with a high probability of being correct. Just like our weatherman and our handwritten-digit reader, the Star Trek 20 Questions player is a function that takes some number of inputs and makes a statistical inference about the output. That inference is based simply on all the answers it has collected: people thinking of a Mugato have tended to answer with a certain sequence more often than with any other. Our brains fill in the wonder and the magic.
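
The article does not describe 20q.net’s actual algorithm, so the following is a hypothetical illustration of ‘statistical inference from collected answers’. It tallies past players’ yes/no answers per object and ranks candidates by how well the current answers match those tallies, a naive Bayes scorer with add-one smoothing. The class, the questions, and the game records are all invented.

```python
import math
from collections import defaultdict

class TwentyQuestionsTally:
    """Hypothetical frequency model: past players' answers, tallied per object."""

    def __init__(self):
        # counts[obj][question] = [number of "yes", number of "no"]
        self.counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
        self.games = defaultdict(int)  # how often each object was the answer

    def record_game(self, obj, answers):
        # answers maps question -> True (yes) or False (no)
        self.games[obj] += 1
        for question, said_yes in answers.items():
            self.counts[obj][question][0 if said_yes else 1] += 1

    def rank(self, answers):
        # Score = log P(object) + sum of log P(answer | object), with add-one
        # smoothing so an answer never seen before is unlikely, not impossible.
        total_games = sum(self.games.values())
        scores = {}
        for obj, n_games in self.games.items():
            score = math.log(n_games / total_games)
            for question, said_yes in answers.items():
                yes, no = self.counts[obj][question]
                matching = yes if said_yes else no
                score += math.log((matching + 1) / (yes + no + 2))
            scores[obj] = score
        return sorted(scores, key=scores.get, reverse=True)

# Made-up past games, not real 20q.net data.
tally = TwentyQuestionsTally()
tally.record_game("Mugato", {"Is it an animal?": True, "Does it have a horn?": True, "Is it furry?": True})
tally.record_game("Mugato", {"Is it an animal?": True, "Does it have a horn?": True, "Is it furry?": False})
tally.record_game("Tribble", {"Is it an animal?": True, "Does it have a horn?": False, "Is it furry?": True})
tally.record_game("Gorn", {"Is it an animal?": True, "Does it have a horn?": False, "Is it furry?": False})

print(tally.rank({"Is it an animal?": True, "Does it have a horn?": True}))
```

Each extra game only updates the counts, which is the sense in which such a system ‘learns’.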

You mean there is no real learning going on? These systems are not getting ‘smarter’ with more data? No. Like our weatherman, they get statistically better at matching and predicting with more data points, but they are not getting ‘smarter’ in the sense that you and I understand it.

Neural net A.I., machine learning, deep learning, or whatever you want to call it, is not a model of cognition and, by its very nature, will not lead to a cognitive model. It’s just not set up that way.

Neuroscientist Pascal Kaufmann scoffs at the notion that big data-powered deep learning algorithms have delivered intelligence of any type. “If you need 300 million pictures of cats in order to say something is a horse or cat or cow, to me that’s not so intelligent,” says the Swiss CEO of Starmind. “It’s more like brute-force statistics.” [August 10, 2017 DATANAMI]

Please comment if you disagree or believe I am oversimplifying. I hope this has shed some light on the mystery.

In my next discussion, I will talk about potential paths to cognition.

Experiment in using genetic algorithms to evolve neural nets – https://danlovy.com/critter/